I never talk about my birthday, but when your girlfriend makes a cake this epic how can you not brag?!

Had my first session of laser tattoo removal today. Found that it was a little more painful than getting a tattoo, but so much quicker. It took longer to go through all the paperwork and discussions at the start of the appointment than it did for the removal itself!

Getting a few small ones on my arm lasered to make way for a sleeve tattoo. I definitely don’t regret those other tattoos I had, just regret the placement. Lesson for next time!

After many, many years of being dissatisfied with having to use a CMS for maintaining my blog I’ve finally switched to a static site generator.

Having to manage a CMS was a total drainer. I don’t want to have to deal with updates, login bruteforcing attacks, or ensuring my site still works because of a change under the hood.

Goodbye, WordPress. You were pretty good, but not the best tool for me. Hello, Hugo.

Hugo is a static site generator written in Go. It’s fast, powerful, and offers a number of conveniences that I really like… which leads me to the following:

I’m using Hugo Pipes to build my SCSS files, run them through PostCSS, then minify and generate a subresource integrity hash.

It’s so easy in fact, that my entire CSS stack is built during rendering of Hugo:

{{ $css := resources.Get "scss/index.scss" | resources.ToCSS (dict "targetPath" "css/build.css") | resources.PostCSS | resources.Fingerprint "sha512" }}

<link rel="stylesheet" href="{{ $css.Permalink }}" integrity="{{ $css.Data.Integrity }}" />

I love this because it means I don’t have to have a build tool or task runner like webpack, Gulp, Grunt, etc. to handle compiling my assets. Every additional dependency used in your site (or project for that matter) is an exponentially increasing burden for you to manage. Maintaining a site should be easy!

A number of static site generators I played with had little to no support for HTML in Markdown files. To me this is an absolute necessity. Everything from the trivial convenience of easily adding a GitHub Gist to being able to add my own shortcodes to handle HTML <figure>s was a really big bonus for me. Why some static site generators choose to not allow HTML or not have support for some sort of shortcode is beyond me.

The Micropub protocol is really taking off. IndieWeb, a community of people aiming to make the web more people-centric (as opposed to more corporate and siloed), came up with the Micropub protocol to make it really easy for one to create, update, and delete content on your own site. This means that even if you change from one CMS to another, or change from a CMS to a static site generator, you can still use the same apps and workflow that you would’ve used previous to the infrastructure transition.
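To make that concrete, here is a minimal sketch of what a Micropub "create" request for a short note looks like when built in JavaScript. The endpoint URL and the token are placeholders I made up for illustration, and `buildMicropubCreate` is a hypothetical helper name, not part of any library. It only builds the request; it doesn't send anything.

```javascript
// Build (but do not send) a form-encoded Micropub "create" request.
// The endpoint and token passed in below are placeholders, not real values.
function buildMicropubCreate(endpoint, token, content) {
  var body = new URLSearchParams();
  body.set('h', 'entry');       // post type: an h-entry (a note or article)
  body.set('content', content); // the text of the post

  return {
    url: endpoint,
    options: {
      method: 'POST',
      headers: {
        'Authorization': 'Bearer ' + token,
        'Content-Type': 'application/x-www-form-urlencoded'
      },
      body: body.toString()
    }
  };
}

var request = buildMicropubCreate(
  'https://example.com/micropub', // hypothetical endpoint
  'TOKEN',                        // hypothetical access token
  'Hello from Micropub'
);

console.log(request.options.body);
```

Any Micropub client sends roughly this shape of request, which is why the app doesn't care what is running behind the endpoint. You could hand `request.url` and `request.options` to `fetch()` to actually post it.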

So how does this tie in to the static site generator?

Well, I did what every typical programmer does, and made my own Micropub endpoint. Written in PHP (my go-to) and built on the Lumen micro-framework, it has support for photo uploads and new posts. My plan is to clean it up a bit and open-source it. Post editing and deleting need to be added too, but they’re not as important for me.

I’ve gone even more minimalist.

2017 and 2018 were (and are) interesting years for the web. Web tracking and analytics have gone beyond fucking creepy. Sites are becoming increasingly larger per request, with an excess of JavaScript tracking tools burning CPU cycles, draining batteries (if applicable), and chewing through data like there’s no tomorrow. I don’t like that web, and I don’t want to be a part of it.

Just yesterday I was looking at a single-page site built on a popular hosted site builder that weighed in at just under 7.5 MB. Seven point five fucking megabytes. For a single page website with a handful of images and a buttload of JavaScript being injected into the site.

We developers have a responsibility to make the web a friendly place for all users, regardless of their device or internet speed. There’s no reason a site with predominantly text and a few images should take up more than 3 MB. And yet here we are in a time where major sites that well and truly have the budget to invest in a properly-engineered site abuse the internet pipes we’ve come to take for granted. It’s not hard, it just takes empathy for the machine.

Previously this site was long-form content and YouTube music videos only. My thoughts were relegated to third-party platforms like Twitter. Ownership of data is important. Really fucking important. Manton Reece is right on the money when he explains why he made Micro.blog. Algorithmic timelines suck. Ads suck.

I want my content to be portable. To remain on a platform I control. Because I control the entire stack I don’t have to be concerned with the direction of the site, or dissatisfied with how a site is being run.

Because of this, my blog will contain short-form, long-form, image posting, and links. All in the one feed and all in the one spot. I hope you, the reader, like the new direction of the site.

Gabe Newell, of Half-Life fame, humiliating every Half-Life fan still waiting for some sort of follow-up from Half-Life 2: Episode Two.

I wonder if I’ll ever see it in my lifetime.

I think this is the best photo I’ve ever taken. Shot recently in Tasmania with a Nikon D something something.

Redid the blog. It’s built with Hugo and supports Micropub posting with my own Micropub endpoint (which I’ll release soon). Loving the speed I get from this site!

I’ve lived in Perth for just over three years, and this coming Monday I’ll be moving back to my original home of Melbourne, Victoria, to join my girlfriend (who moved a few weeks earlier for work). It’s been an amazing experience living in one of the most isolated capital cities in the world, and one I will not forget.

Before I moved to Perth I was working as a Mac technician and SME consultant. I’d done web development on and off since 2005 but it was always as a side project and just a bit of fun. I had no intention of continuing to be a technician/SME consultant in Perth so a career change was in order. I’ve done web development as a hobby for a long time, so why not make a career out of it?

Sometimes turning a hobby into a job can take all the fun out of it. I love going to the gym and keeping fit, but I know I would never want to become a personal trainer, let alone work in a gym. Thankfully turning web development from a hobby into a career did not meet that unfortunate demise.

My first job was handling the entire development process where I worked with some amazing talent in Perth. Henry Luong, Daniel Elliot, and Ryan Vincent were all really clever designers and it was a great experience to try and turn their designs into a reality. I knew that I was definitely on the right path, but I found I enjoyed more of the backend process. Dealing with browser quirks for the frontend frustrated me. I knew that I’d prefer to handle the backend side of things, and a year later got the opportunity of a lifetime to work at Humaan.

A defector outside Defectors. Perfect 👌

My last day at Humaan was yesterday but I was there for just under two years. Working with the team there has been an incredible experience and I’ve had the pleasure of working on some really cool projects, one of which is easily the biggest project I’ve ever got to work on. The team at Humaan are amazing and I feel truly honoured to have worked with them all for two years. It was easily the best job I’ve ever had. You know it’s a great job when you’re excited to go to work every day.

Just managed to avoid singeing my hair on the birthday cake.

Awards, awards, and more awards!

Web Directions 2015. My first ever conference. It was amazing!

I’ve made some lifelong friends at Humaan and while it pains me to leave such an experienced team, it’s time to move on back home.

When I moved to Perth and was trying to immerse myself in the web scene I found a group on meetup.com called Front End Web Developers Perth (or just Fenders for short). There I met some really amazing people and learnt a tremendous amount!

The leader of the group, Mandy Michael (who I can neither confirm nor deny is also Batmandy) deserves special recognition. She built up a community that is super friendly, helpful, and supportive. Mandy gave me opportunities where I got to do a number of presentations, run a workshop, and be a judge for a competition. I am forever thankful to Mandy for those opportunities. I honestly believe the Perth web development community wouldn’t be the same without Mandy’s incredibly hard work and commitment to setting up the group, organising events, and just generally being an all-round superstar. Three cheers to Mandy!

Patima Tantiprasut also deserves a special mention. Her infectious energy and personality drive Localhost. I honestly have no idea how Patima manages to do so many things but also remain the most energetic person I’ve ever met. Thank you Pats, you’re awesome!

As I write this it’s Saturday evening. On Monday I fly back to Melbourne and start the next chapter of my life. I’ve got a gig as a developer for a small boutique development agency where I’ll be doing more PHP, Vue.js and probably some Golang! I’m super excited to see what comes next.

Note: this article I wrote originally appeared on the Humaan blog.

Tracking down bugs can be hard. Damn hard. And figuring out how or when they cropped up in the first place can be even harder. Recently, we launched our social media aggregator platform, Waaffle. It’s written in Laravel and deployed with Envoyer, but we also took the opportunity to try out Sentry for tracking bugs.

Spoiler alert: it was awesome.

One of the features I really like is the ability to track releases, which means you can tell at a glance which release was responsible for your bug. We’ve got a deployment hook in Envoyer that pings Sentry when we deploy a new release. Our hook runs in the “After” section of the “Activate New Release” action and contains the following command:

    curl https://sentry.io/api/hooks/release/builtin/project_id/project_webhook_token/ \
      -X POST -H 'Content-Type: application/json' -d '{"version": "{{ sha }}"}'

(FYI, it’s the very last post-deployment hook we run, just in case another hook fails.)

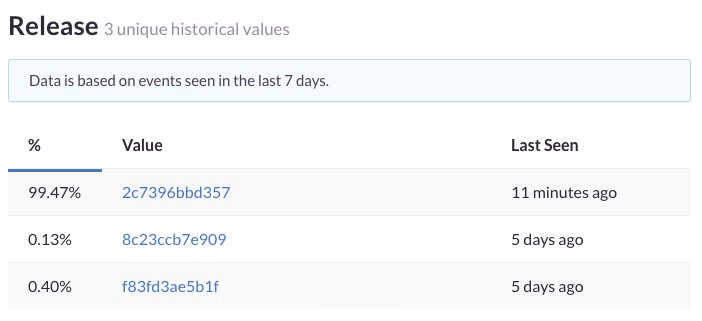

The Sentry PHP package includes a section in the config for specifying your app version. However, things can get tricky if, like us, you’re using, say, git commit hashes instead of version numbers.

Thankfully, Sentry’s Laravel package provides code for a nice release hash you can use for getting your git commit hash:

'release' => trim(exec('git log --pretty="%h" -n1 HEAD')),

Which if you run in Artisan’s tinker mode, gives you something like this:

1a2b3c

Now the problem: we use Envoyer for our deployments, which downloads the tarball of a specified branch/release/tag instead of the entire git repository. That tarball doesn’t contain any of the git history in it, so it’s not technically a repository, thus running the Sentry Laravel command won’t give you a commit hash. If you try, you’ll get the following lovely error:

fatal: Not a git repository (or any of the parent directories): .git

Which means if Sentry tracks an error and saves it, you’ll see there’s no release attached to the issue. Drats!

Somehow, we need a way to get the current commit hash from our Envoyer deployments so we can tell Sentry how to get the current commit. Thankfully, Envoyer comes to the rescue!

In the Envoyer docs, under Deployment Hooks, you’ll see a section on how you can get the current git commit hash (or sha1 hash). Now, this hash is actually the first 12 characters of the current git commit hash - aka. something you can use in the deployment lifecycle.

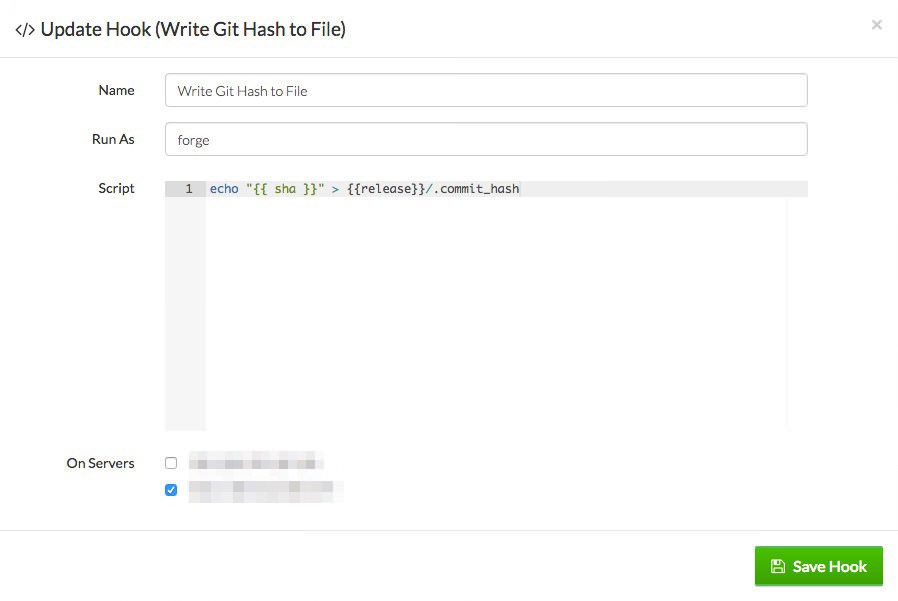

From here, we want to target the current release directory, so let’s create a deployment hook in the “After” section of “Activate New Release” and call it “Write Git Hash to File” with the user “forge”:

echo "{{ sha }}" > {{release}}/.commit_hash

Pretty simple, huh? All it does is echo the current git hash to stdout (standard output), then we redirect that output to a file called .commit_hash in the current release directory. The single arrow (>) means “set the file .commit_hash to 0 length, then append to it”. If we used two arrows (>>) we’d only append to the file, which we wouldn’t want to do - though technically it doesn’t really matter given the release directory has just been created, but it’s the principle of the thing!

Once we’ve got that file written on every deployment, we need to tell Sentry how to get that commit hash! If you wanted to, you could change the config so Sentry fetches the release, like so:

'release' => trim(exec('echo "$(< .commit_hash)"')),

(Note that I’m using echo and redirection here, I don’t want to get into the UUOC debate!)

Except this only works if you have that .commit_hash file in every environment, which we don’t. So, we’re stuck between a rock and a hard place. What we need is a solution that uses the .commit_hash file if it exists, or falls back to a git repository, or finally, returns null if neither of those exist.

In our project, we’ve got a small helpers file with all functions that exist in the global namespace. That’s where we put our solution:

    <?php

    if (!function_exists('get_commit_hash')) {
        /**
         * Checks to see if we have a .commit_hash file or .git repo and return the hash if we do.
         *
         * @return null|string
         */
        function get_commit_hash()
        {
            $commitHash = base_path('.commit_hash');

            if (file_exists($commitHash)) {
                // See if we have the .commit_hash file
                return trim(exec(sprintf('cat %s', $commitHash)));
            } elseif (is_dir(base_path('.git'))) {
                // Do we have a .git repo?
                return trim(exec('git log --pretty="%h" -n1 HEAD'));
            } else {
                // ¯\_(ツ)_/¯
                return null;
            }
        }
    }

Pretty basic really - just an if/elseif/else block with three possible outcomes. Note that I’m using the base_path Laravel helper, so we can be sure we’ve got the full path to the file/folder in question. Then, in our Sentry configuration file we have the following for the release:

'release' => get_commit_hash(),

Now whenever Sentry needs the commit hash, it’ll call that function and get its return value. Nice! And for those of you concerned about the overheads of getting the commit hash, you can do a php artisan config:cache so the hash is only calculated once. I highly recommend caching the app configuration as part of your deployment process to help to make things that little bit faster. That file is located in bootstrap/cache/config.php and you can verify the existence of this cached value by doing the following (in the root of the project, with the configuration cached):

grep -n --context=3 \'release\' bootstrap/cache/config.php

You should see something like this:

    484-  'sentry' =>
    485-  array (
    486-    'dsn' => 'https://xxxxx:xxxxx@sentry.io/project_id',
    487:    'release' => '1a2b3c4d5e6f',
    488-  ),
    489-  'auth' =>
    490-  array (

(The line numbers to the left indicate where in the file it was found.)

Now you’re set! The next time you do a deployment, you’ll have Sentry correctly logging issues with the current release throughout the life of the project.

Happy tracking, folks!

So I got punched in the face this morning. “Why?” you ask? Because I stepped in to prevent a fight.

Northbridge, the lively hub of Perth, has a bit of an assault problem (funnily enough, the brawl in that article was the exact same place as my incident). The combination of alcohol, drugs, and a culture that promotes fist fighting, in my opinion leads to this anti-social and violent behaviour.

I was on my way home from a work dinner (followed by some 90s pop hits) when two men decided to take it outside, as they say. Normally I wouldn’t be one to get involved in someone else’s fight, but when it occurred literally right next to me, I was unwittingly brought into the situation.

Because the elbow of the windup for the punch meant for the victim almost hit me, I grabbed the arm and started trying to talk him down when he decided to shut me up by punching me in the mouth. I guess it worked because I ended up with my butt on the ground (and with a pair of now broken headphones). I’m very lucky to have not hit my head on the pavement, or on the corner of a table just next to where the incident took place.

Thankfully there were other people around, and some police officers were only about a hundred metres up the road so they were able to quickly intervene and restrain the attacker. After giving my side of the story to the police and knowing that he was heading off to the local police station, I made my way home and called it a night.

Today I went to the doctor to get a checkup to ensure there was no bad damage done to me. Again, thankfully, nothing bad, just a swollen lip and a bit of a sore jaw. Nothing serious at all. But it could’ve been much worse.

Whenever an assault hits the news I often see that people ask for more police presence. In this case, there were uniformed police officers around a hundred metres up the road so how much more police presence does there need to be for it to become a deterrence? Have officers stationed every fifty metres? Doesn’t really sound that practical.

I’m far from an expert in preventing violence so I don’t have any good ideas or thoughts on how to combat anti-social behaviour. Gone are the days where the Good Samaritan can be safe when stepping in to help another person. It’s a shame, really.

Why am I posting this? Mainly because I’m annoyed and frustrated that these kinds of incidents keep happening, week after week, day after day. Come on people, let’s be nice to each other. As George Costanza would say:

Note: I originally wrote this post for the Humaan blog.

In case you’ve been living under a rock, LocalStorage is a JavaScript API that allows you to store content in the browser’s cache and access it later on when you need it. Similar to how cookies work, but you’ve got much more than ~4KB (the maximum size for a cookie). That said, while there’s no size limit for each key/value pair in LocalStorage, you’re restricted to around 5-10 MB for each domain. I say 5-10 MB because as per usual, different browsers have different maximum limits. Classic!

Recently we’ve had the opportunity to use LocalStorage for our content. I quite liked it at first because it was very simple to use, but it wasn’t long before we ran into issues. Get this: LocalStorage stores content indefinitely, while SessionStorage stores content for the lifetime of the browser session… and there’s no middle ground. What if I want to store something for 30 minutes? Tough luck!

To make things more interesting, even though some browsers report that they have LocalStorage (I’m looking at you, Safari in Private Browsing mode!) you can’t actually use it (trying to write to/read from it will throw an exception).

Bearing these in mind, we decided to write a small custom library that gives you the ability to use expiries, fail-safe LocalStorage detection, and callbacks for when fetching data doesn’t run as expected.

Just want to see the final result? Here it is.

Let’s run through it!

Our most important method is supportsLocalStorage().

    /**
     * Determines whether the browser supports localStorage or not.
     *
     * This method is required because iOS Safari in Private Browsing mode incorrectly says it supports localStorage, when in fact it does not.
     *
     * @kind function
     * @function LocalStorage#supportsLocalStorage
     *
     * @returns {boolean} Returns true if setting and removing a localStorage item is successful, or false if it's not.
     */
    supportsLocalStorage: function () {
        try {
            localStorage.setItem('_', '_');
            localStorage.removeItem('_');
            return true;
        } catch (e) {
            return false;
        }
    }

This method makes sure we can actually use LocalStorage, bypassing the likes of our aforementioned friend, Safari in Private Browsing mode. Here we wrap the LocalStorage set/remove in a try/catch statement to catch any read/write exceptions.

Then, we call supportsLocalStorage() at the start of all the other methods to ensure we can actually use LocalStorage. If not, just abort any call early.

Now let’s look at the setter, setItem():

    /**
     * Saves an item in localStorage so it can be retrieved later. This method automatically encodes the value as JSON, so you don't have to. If you don't supply a value, this method will return false immediately.
     *
     * @example <caption>set localStorage that persists until it is manually removed</caption>
     * LocalStorage.setItem('key', 'value');
     *
     * @example <caption>set localStorage that persists for the next 30 seconds before being busted</caption>
     * LocalStorage.setItem('key', 'value', 30);
     *
     * @kind function
     * @function LocalStorage#setItem
     *
     * @param {!string} key - Name of the key in localStorage.
     * @param {!*} value - Value that should be stored in localStorage. Must be able to be encoded/decoded as JSON. Please ensure your object doesn't have the key __expiry as this will accidentally conflict with the expiry handler.
     * @param {?int} [expiry=null] - Time in seconds that the localStorage cache should be considered valid.
     * @returns {boolean} Returns true if it was stored in localStorage, false otherwise.
     */
    setItem: function (key, value, expiry) {
        if (!this.supportsLocalStorage() || typeof value === 'undefined' || key === null || value === null) {
            return false;
        }

        if (typeof expiry === 'number') {
            value = {
                __data: value,
                __expiry: Date.now() + (parseInt(expiry) * 1000)
            };
        }

        try {
            localStorage.setItem(key, JSON.stringify(value));
            return true;
        } catch (e) {
            console.log('Unable to store ' + key + ' in localStorage due to ' + e.name);
            return false;
        }
    }

Not a particularly complex method when you think about it. The first two parameters are the key and value for the content you want to store. If you’re experienced with JavaScript (or any other language that uses the concept of keys and values with arrays/objects/hashes/etc) you’ll understand what they mean with no problems. The value can be anything that the core JSON object can stringify.

The last parameter, expiry, is an optional numeric parameter that if supplied, is used as the lifespan of your key/value combination. When combined with our LocalStorage getter, we can use this expiry value to know whether we should bust the key/value next time we go to fetch it!

Be warned: there’s not a tremendous amount of validation ensuring legitimate numbers are used as an expiry – just provide an integer for the number of seconds you want your key/value combination remaining valid in the LocalStorage cache. It’s easy, you’ll be fine.
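If you did want a little more validation, a small guard in front of setItem() would do it. This is only a sketch: `normaliseExpiry` is a hypothetical helper that isn't part of our library, and treating anything invalid as "no expiry" is just one possible policy.

```javascript
// Hypothetical helper: returns a whole number of seconds, or null when the
// expiry should be ignored (the item then persists until manually removed).
function normaliseExpiry(expiry) {
  // Reject non-numbers, NaN, Infinity, zero and negative values.
  if (typeof expiry !== 'number' || !isFinite(expiry) || expiry <= 0) {
    return null;
  }

  // Round fractional seconds down; anything under one second is ignored.
  var seconds = Math.floor(expiry);
  return seconds > 0 ? seconds : null;
}

console.log(normaliseExpiry(30));   // a plain integer passes straight through
console.log(normaliseExpiry('30')); // strings are rejected rather than coerced
```

Returning null matches the documented default for the expiry parameter, so the result could be passed straight through as the third argument.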

You’ll notice this method returns true without checking whether your item was actually set in LocalStorage. Unfortunately the API itself doesn’t return a “save” response, so we have to assume the best and say that it did. But if this isn’t good enough, you can write a fetch method to confirm your suspicions. To me, that seems like overkill because the ideal time to check your item is when you’re actually fetching the data to use it. LocalStorage, like cookies, can be easily modified by the end user, so don’t assume that just because you saved it, it’ll be there the next time you go to pull it.
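For the curious, a write-then-read check could look something like the sketch below. Both function names are hypothetical and not part of our library. The storage object is passed in as a parameter so the snippet also runs outside a browser, and `createMemoryStorage` is just an in-memory stand-in for `window.localStorage`.

```javascript
// In-memory stand-in with the same three methods we use from localStorage.
function createMemoryStorage() {
  var items = {};
  return {
    setItem: function (key, value) { items[key] = String(value); },
    getItem: function (key) {
      return Object.prototype.hasOwnProperty.call(items, key) ? items[key] : null;
    },
    removeItem: function (key) { delete items[key]; }
  };
}

// Hypothetical helper: store a JSON-encoded value, then read it straight
// back and confirm the stored string matches what we tried to write.
function setAndVerify(storage, key, value) {
  var encoded = JSON.stringify(value);
  try {
    storage.setItem(key, encoded);
    return storage.getItem(key) === encoded;
  } catch (e) {
    // Covers quota errors and Safari's Private Browsing behaviour.
    return false;
  }
}

var verified = setAndVerify(createMemoryStorage(), 'devices', { count: 2 });
console.log(verified);
```

In a browser you would pass `window.localStorage` as the first argument instead of the stand-in.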

Our next very important method is our getter, getItem()!

    /**
     * Fetches the value associated with key from localStorage. If the key/value aren't in localStorage, you can optionally provide a callback to run as a fallback getter.
     * The callback will also be run if the localStorage cache has expired. You can use {@link LocalStorage#setItem} in the callback to save the results from the callback back to localStorage.
     *
     * @example <caption>Fetch from localStorage with no callback and get the response returned.</caption>
     * var response = LocalStorage.getItem('key');
     *
     * @example <caption>Fetch from localStorage and handle response in a callback.</caption>
     * LocalStorage.getItem('key', function(response) {
     *     if (response === null) {
     *         // Nothing in localStorage.
     *     } else {
     *         // Got data back from localStorage.
     *     }
     * });
     *
     * @kind function
     * @function LocalStorage#getItem
     *
     * @param {!string} key - Name of the key in localStorage.
     * @param {?function} [optionalCallback=null] - If you want to handle the response in a callback, provide a callback and check the response.
     * @returns {*} Returns null if localStorage isn't supported or the key/value isn't in localStorage, the stored value if it was in localStorage, or the callback's return value if a callback was provided.
     */
    getItem: function (key, optionalCallback) {
        if (!this.supportsLocalStorage()) {
            return null;
        }

        var callback = function (data) {
            data = typeof data !== 'undefined' ? data : null;

            return typeof optionalCallback === 'function' ? optionalCallback(data) : data;
        };

        var value = localStorage.getItem(key);

        if (value !== null) {
            value = JSON.parse(value);

            if (value.hasOwnProperty('__expiry')) {
                var expiry = value.__expiry;
                var now = Date.now();

                if (now >= expiry) {
                    this.removeItem(key);

                    return callback();
                } else {
                    // Return the data object only.
                    return callback(value.__data);
                }
            } else {
                // Value doesn't have expiry data, just send it wholesale.
                return callback(value);
            }
        } else {
            return callback();
        }
    }

This method is a little more complex. Obviously, the first parameter is the key name for the value you want to retrieve. The second parameter, however, is a callback you can provide to handle when a value doesn’t exist in LocalStorage. While a callback isn’t technically necessary, because LocalStorage calls aren’t asynchronous, I prefer using them as I feel it makes my code neater.

In your closure, your value in LocalStorage will be returned if it exists. Otherwise, if it doesn’t exist or if it has expired, you’ll get a null return value. This was the cleanest way I could come up with for handling both scenarios, which unfortunately does mean having to do an if/else statement in the closure to check whether the response is null or not.

Finally, our last method is a simple remover method.

    removeItem: function (key) {
        if (this.supportsLocalStorage()) {
            localStorage.removeItem(key);
        }
    }

The native LocalStorage method doesn’t return a status on whether the operation was successful or not, so I mimic the core method by returning void. Again, you could use a getter to ensure data has been removed, but I don’t really see a point in ensuring the core method has worked correctly.

Now that we’ve run through the class and its methods, here it is in its entirety: https://bitbucket.org/snippets/humaanco/gKL7x

    var ls = require('lib/LocalStorage');

    // These examples assume you are using jQuery for AJAX calls.

    // Closure usage
    ls.getItem('devices', function(devices) {
        if (devices === null) {
            $.ajax({
                url: '/data/devices.json',
                dataType: 'json',
                method: 'get'
            }).done(function (data, textStatus, jqXHR) {
                if (jqXHR.status == 200) {
                    // Cache response in localStorage for 1 hour.
                    ls.setItem('devices', data.devices, 3600);

                    anotherFunction(data.devices);
                }
            });
        } else {
            anotherFunction(devices);
        }
    });

    // Variable assignment
    // Note: this only yields a value on a cache hit. The AJAX request is
    // asynchronous, so on a cache miss the callback returns undefined and the
    // data is only available from localStorage on a later call.
    var devices = ls.getItem('devices', function(devices) {
        if (devices === null) {
            $.ajax({
                url: '/data/devices.json',
                dataType: 'json',
                method: 'get'
            }).done(function (data, textStatus, jqXHR) {
                if (jqXHR.status == 200) {
                    // Cache response in localStorage for 1 hour.
                    ls.setItem('devices', data.devices, 3600);
                }
            });
        } else {
            return devices;
        }
    });

    if (typeof devices !== 'undefined') {
        doSomething(devices);
    }

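Both of these examples use the expiry feature in the LocalStorage helper. If the devices key/value doesn’t exist in LocalStorage, we’ll fetch it via AJAX and save it to LocalStorage for 1 hour (60 minutes), then either pass it off to another function or return it. Keep in mind that the variable assignment style can only return the data synchronously on a cache hit. On a miss, the AJAX request finishes after getItem() has already returned.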
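One way to avoid juggling the hit and miss cases at every call site is to wrap the pattern in a small function that always hands the data to a single callback. This is a sketch, not part of our helper: `getOrFetch` is a hypothetical name, `cache` is assumed to be any object with the same getItem(key) and setItem(key, value, expiry) shape as the helper above, and `fetcher` is assumed to call `done(value)` when fresh data arrives (or `done(null)` on failure).

```javascript
// Hypothetical wrapper: read from the cache, or fetch, store and deliver.
// onReady is called exactly once, with the data or with null on failure.
function getOrFetch(cache, key, ttlSeconds, fetcher, onReady) {
  var cached = cache.getItem(key);

  if (cached !== null) {
    // Cache hit: deliver immediately, no request needed.
    onReady(cached);
    return;
  }

  // Cache miss (or expired): ask the fetcher for fresh data.
  fetcher(function (fresh) {
    if (fresh !== null && typeof fresh !== 'undefined') {
      cache.setItem(key, fresh, ttlSeconds);
    }
    onReady(typeof fresh === 'undefined' ? null : fresh);
  });
}

// Example wiring with jQuery (commented out so the sketch runs anywhere):
// getOrFetch(ls, 'devices', 3600, function (done) {
//   $.ajax({ url: '/data/devices.json', dataType: 'json', method: 'get' })
//     .done(function (data) { done(data.devices); })
//     .fail(function () { done(null); });
// }, doSomething);
```

The calling code then only ever deals with one callback, whether the data came from LocalStorage or from the network.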

And there you have it! Check the Bitbucket snippet link for the latest updates as we continue to use and refine our LocalStorage helper.