Devastating news about Keith Flint’s passing. The Prodigy have been a favourite of mine for a very long time.

Had a ticket to see them play a month back in Melbourne but was too unwell to attend. I’m shattered.

Day 3 of my V for Vendetta/Watchmen/The Punisher sleeve done! Absolutely loving the way this is looking so far, can’t wait to have it finished soon.

Been playing a lot of The Witcher 3: Wild Hunt in my downtime recently. Sometimes you need to take a break from working and relax.

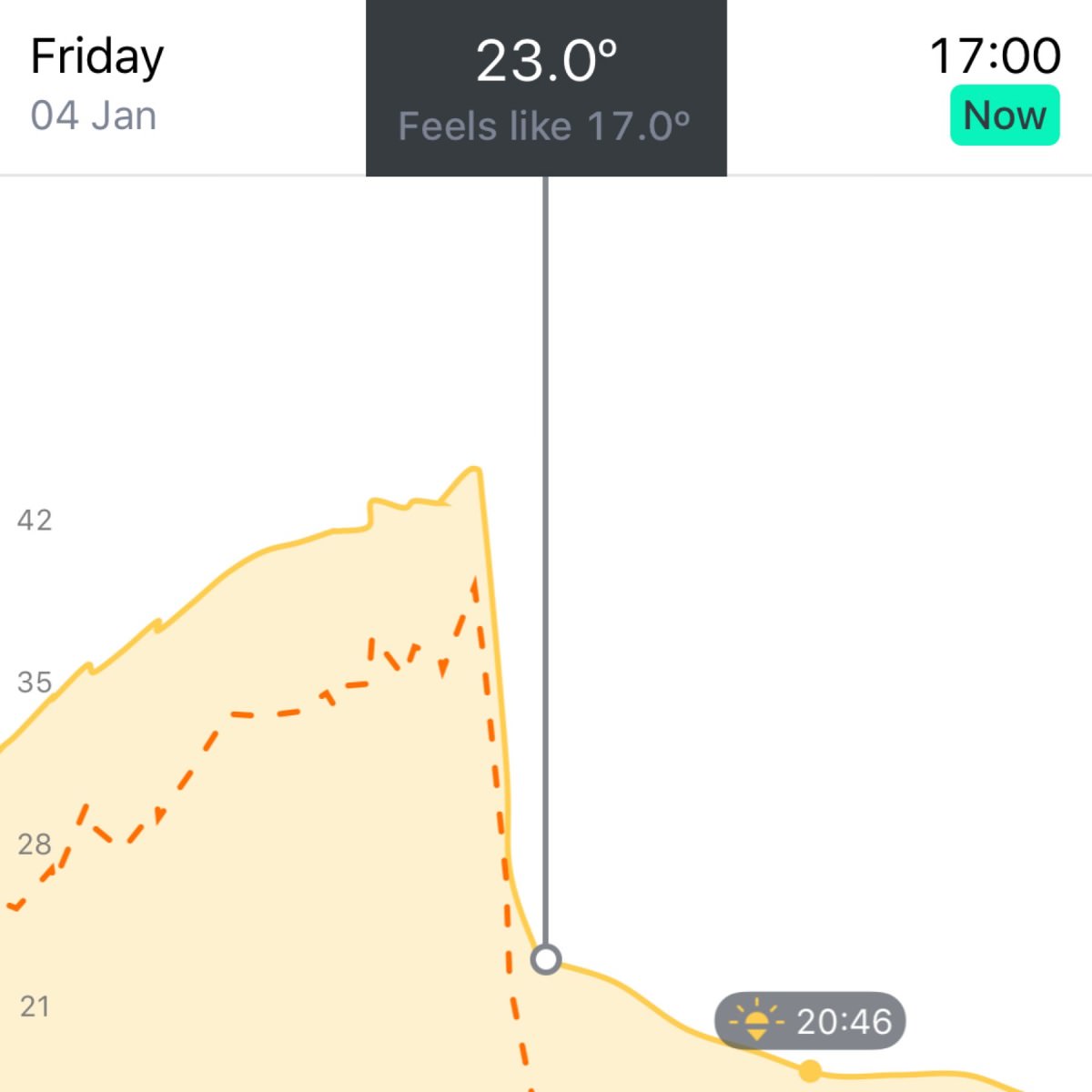

Sweet, sweet respite. Today was a scorcher.

John Siracusa talking about CrashPlan on the latest episode of ATP reminded me that the cheaper period is just about up.

I’m switching over to Backblaze as it serves my purpose just fine and isn’t a disgustingly janky Java app.

Just about to sit down for day 2 of my Watchmen/The Punisher/V for Vendetta tattoo sleeve. Dreading the inside upper arm - ahhh the things we do for art.

If you dislike what Facebook is doing, continuing to use Instagram only helps prop up Facebook’s business.

As much as I agree with Manton here, Instagram is the last of the social media networks that I use to stay connected with the outside world.

Instagram is the only app on my phone that I have time limits set up on - no more than 10 minutes a day.

I think the writing is on the wall though. 2019 is the year I quit Instagram for good.

After 551 days straight of meeting my standing goal, my Series 2 Apple Watch has all of a sudden started shutting down with 60%-70% battery still showing. As much as I love the Apple Watch, I’m pretty disappointed.

The Apple Watch is the one device that keeps me tied to the Apple platform. Perhaps this might be the straw that breaks the camel’s back and pushes me to Android.

Made some small improvements to my Micropub endpoint: removed the coupling to Hugo and made it easier to add a new provider.

Something I should’ve done earlier but had slipped my mind.

When I was redoing my blog many moons ago I decided I didn’t want to use a CMS to write posts in. I wanted to be able to write my posts in the Micro.blog iOS app or Quill. Doing this meant building on Micropub as my open standard API of choice.

I had two choices: either use an existing API endpoint library or make my own.

Being a programmer I decided on the latter. Enter: my Micropub endpoint.

Built on PHP 7.1 and using Laravel’s Lumen micro-framework, the API has only a handful of routes (all of which require you to be authenticated).

Currently it supports the following:

/media/)

It’s designed to work with Hugo; however, it’s a piece of cake to add a different provider that works for your needs.
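To give a rough idea of what a provider could look like, here is a minimal sketch in Python (the endpoint itself is PHP, so this is an illustration rather than the real code). The `Provider` and `HugoProvider` names, the `create_post` method and the timestamp-based file name are all assumptions made for the example; the only Hugo-specific part is writing a Markdown file with YAML front matter into a content directory.

```python
from abc import ABC, abstractmethod
from datetime import datetime, timezone
from pathlib import Path


class Provider(ABC):
    """Hypothetical interface: anything that can store a new post."""

    @abstractmethod
    def create_post(self, content: str, published: datetime) -> Path:
        """Persist the post and return where it was written."""


class HugoProvider(Provider):
    """Writes posts as Markdown files with YAML front matter."""

    def __init__(self, content_dir: Path) -> None:
        self.content_dir = Path(content_dir)

    def create_post(self, content: str, published: datetime) -> Path:
        # Assumption: name the file after the publish timestamp.
        slug = published.strftime("%Y%m%d%H%M%S")
        path = self.content_dir / f"{slug}.md"
        front_matter = f"---\ndate: {published.isoformat()}\n---\n\n"
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(front_matter + content + "\n", encoding="utf-8")
        return path


if __name__ == "__main__":
    provider = HugoProvider(Path("content/micro"))
    print(provider.create_post("Hello from Micropub", datetime.now(timezone.utc)))
```

Supporting a different static site generator would then mean writing another small class with the same `create_post` method.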

Authentication is handled through IndieAuth and by default my endpoint uses IndieAuth.com to authenticate with. In the configuration of the endpoint you can customise that URL so if you have your own server for IndieAuth (or wish to use a different service) you can do just that.
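The token check can be sketched like this, again in Python for illustration only. It assumes the usual IndieAuth flow where the endpoint sends the bearer token to a token endpoint and gets back JSON with `me` and `scope`. The default URL, the `verify_token` and `fetch_token_info` names and the `TokenInfo` type are assumptions for the example, not the endpoint's actual configuration keys or code.

```python
import json
import urllib.request
from dataclasses import dataclass
from typing import Callable, List

# Assumption: a hosted token endpoint, overridable through configuration.
DEFAULT_TOKEN_ENDPOINT = "https://tokens.indieauth.com/token"


@dataclass
class TokenInfo:
    me: str
    scopes: List[str]


def fetch_token_info(token: str, endpoint: str) -> dict:
    """Ask the token endpoint who this bearer token belongs to."""
    request = urllib.request.Request(
        endpoint,
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))


def verify_token(
    token: str,
    expected_me: str,
    endpoint: str = DEFAULT_TOKEN_ENDPOINT,
    fetch: Callable[[str, str], dict] = fetch_token_info,
) -> TokenInfo:
    """Reject the request unless the token was issued for our own site."""
    data = fetch(token, endpoint)
    me = data.get("me", "")
    # Compare without trailing slashes so both URL forms match.
    if me.rstrip("/") != expected_me.rstrip("/"):
        raise PermissionError("token was not issued for this site")
    return TokenInfo(me=me, scopes=data.get("scope", "").split())
```

Pointing `endpoint` at your own IndieAuth server is the only change needed to swap services, and passing a different `fetch` makes the check easy to test without a network call.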

More features like updating and deleting posts will be added but they’re not a big priority to me at this stage.

Get the Micropub endpoint here

Try it out and post any issues in the GitHub issue tracker, or submit a PR!